By Dr. Bruce Smith, June 25, 2023


What, exactly, is artificial intelligence? From Investopedia:

“The ideal characteristic of artificial intelligence is its ability to rationalize and take actions that have the best chance of achieving a specific goal. A subset of artificial intelligence is machine learning (ML), which refers to the concept that computer programs can automatically learn from and adapt to new data without being assisted by humans. Deep learning techniques enable this automatic learning through the absorption of huge amounts of unstructured data such as text, images, or video.”

There are two iffy concepts in the first line. There’s the ability to rationalize, that is, to decide or choose, and the ability to take action. Further down there’s the idea that computer programs can learn from and adapt to new data without human assistance. That leads to more decision making and more actions, we may assume. It won’t be about storing data and making it useful to us. It will be more and more about using data to shape and then control our actions.

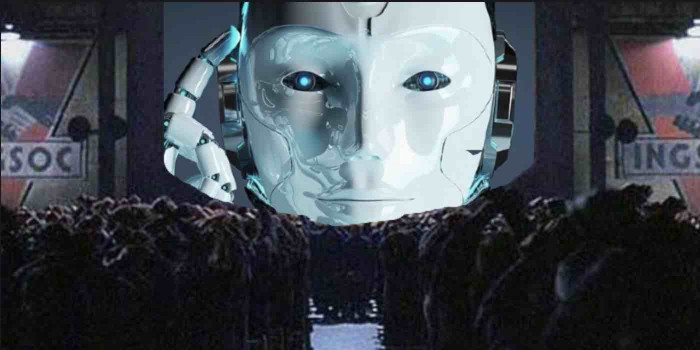

These capabilities are troubling, to be sure, but they lead us to the greater issue of accountability. For the vast majority of us, the power generated by AI is anonymous, completely out of reach. Do we want another faceless power source to control us?

Governments and their bureaucracies hold many decision-making powers and grab more of that power every day. Why would we want to hand decision-making or regulatory powers over to an entity even more obscure, faceless, and infuriating than government? Got a complaint about a charge on your credit card? Go to the AI portal, create an account, and file as many complaints as you like!

Many corporations have grown so large that they tell us what to do as consumers of their products. They don’t seek ways to give us better value or make us happier as consumers, but tell us what to do to make their corporate lives easier and more profitable... often at our expense. That’s upside down.

So how does AI’s lack of accountability affect us? Without accountability in our lives we can no longer be held responsible for our actions. When personal responsibility has been handed over to an anonymous entity, then we will have lost the essence of our humanity. Then it’s an animal world, reduced to stimulus and response.

In the trials at Nuremberg, the question often was, “Why did you do that?” Equally often the answer was, “I was just following orders.” Just think of all the ways people use rationalization to avoid personal responsibility. Maybe some of these examples sound familiar.

For people who don’t crave power over others, these excuses are terrifying. For those who do crave such power, they sound like variations on a warm bath.

The lack of accountability means that those who seek to rule us have an excuse for any action. It’s the will to wield power that makes it dangerous because the will to power over free people requires deceit. They need cover for their real agendas. They need excuses to take away freedom and responsibility. The incredible excuse: AI can do it better than we can do it ourselves. It knows better because it has better information, all the information. It’s the ultimate cloaking device for malfeasance. Handing decision making over to “objective” machines elevates the results, our orders, to a kind of unaccountable, omnipotent omniscience.


In an odd kind of way, it’s exactly the same as the argument behind the Wisconsin Idea, the original basis of Progressivism. Don’t worry about handing over power to control people. It’s going to be experts, scientists, people who only want the best result for the public good that will be looking at these problems and giving us solutions. They won’t be political types, just experts. They’ll be able to look at everything objectively! They’ll weigh all the data and choose the best answer! And anyhow, it’s for your own good.

With accountability, like famous quotes, the name at the end makes all the difference. That name is a big part of the accountability. When I see the name I can begin to decide if this person ought to know something about the subject. We can look further to see if he actually does. We can find out if he made mistakes in the past, or if he enjoyed a career of unregulated evil. We can look up the history of the thing. What characteristics has it displayed in the past? What kind of record does it have? What’s the batting average?

But the ability to follow up, to check, to verify, to look up the footnotes will no longer exist. You won’t need to look stuff up. AI will know everything about everybody. It will have complete knowledge, so let’s just call it perfect knowledge or godlike knowledge. What could possibly go wrong?

At recent hearings, the people creating this monster told us it was a good thing overall, but that government should be involved to make sure it doesn’t become a bad thing. Wow. They’ll team up to make sure it works for the good of all. Well, that will make everything just dandy. I feel much better now. Go ahead and enslave us all but tell us after it’s too late. It’s for our own good, right?

So anyone wishing to avoid responsibility will find the capabilities of AI mesmerizing. For the rest of us the only sensible response to this isn’t just “No.” It’s “Oh, Hell no!”


Dr. Bruce Smith (Inkwell, Hearth and Plow) is a retired professor of history and a lifelong observer of politics and world events. He holds degrees from Indiana University and the University of Notre Dame. In addition to writing, he works as a caretaker and handyman. His non-fiction book The War Comes to Plum Street, about daily life in the 1930s and during World War II, may be ordered from Indiana University Press.